When PostgreSQL Logical Replication Nearly Broke My Weekend (And What I Built Instead)

You know that sinking feeling when a "simple" database migration turns into a 3-day debugging nightmare? That was my last weekend.

What started as a straightforward PostgreSQL logical replication setup for 81 million records became a masterclass in hidden configuration gotchas and operational blind spots.

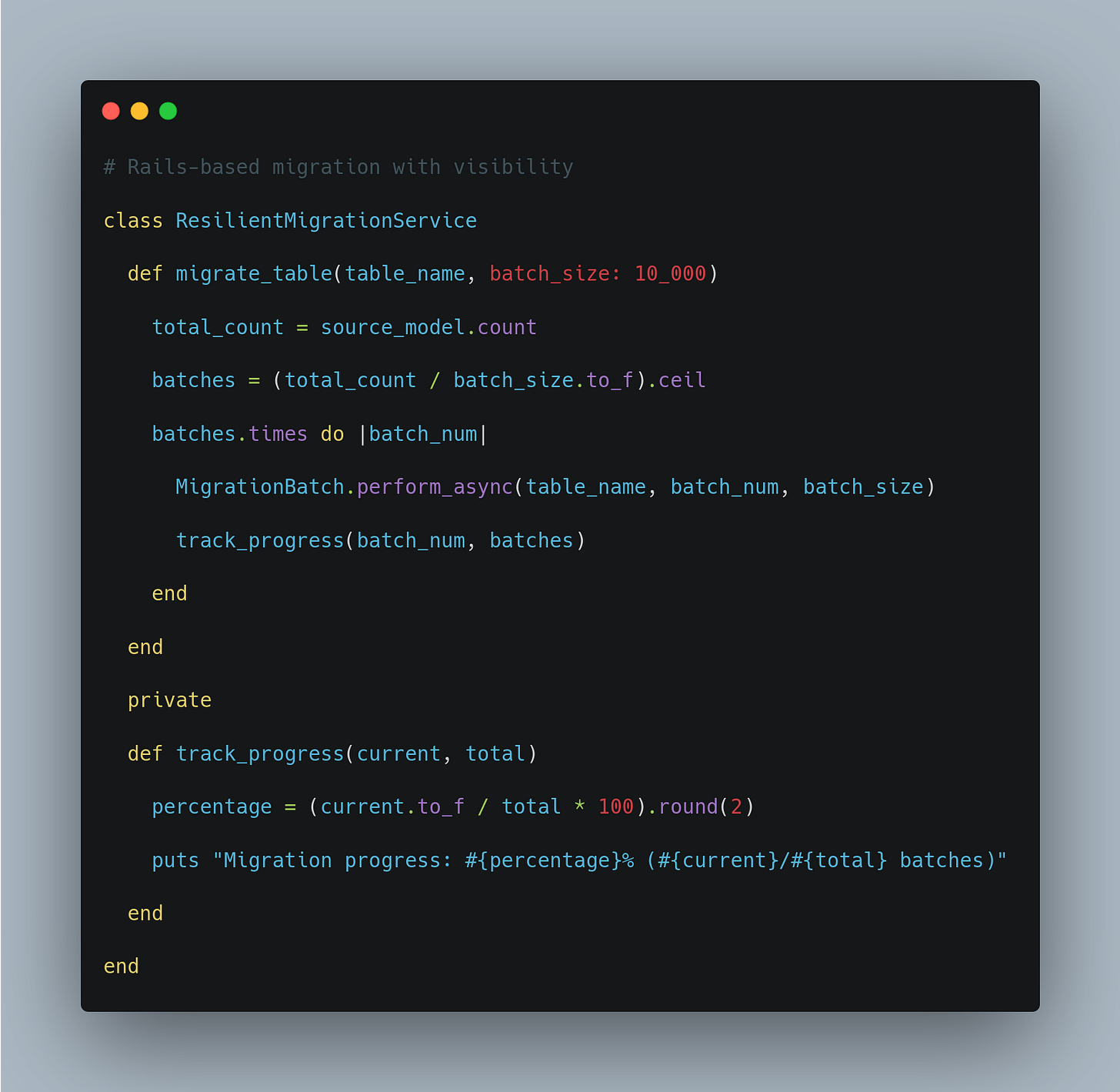

In this issue, I'll take you through why I ditched database-level replication for a Rails-based migration system that actually works. No theory dumps – just real problems, real solutions, and a migration strategy you can trust.

🚀 What you'll learn:

Why even "successful" database migrations can be operational disasters

How to build observable, resumable data migrations with Rails

When to choose application-level solutions over database features

A battle-tested migration architecture that eliminates guesswork

The Problem

Here's what we were dealing with: migrating two massive join tables (photo_kids and photo_sections) containing 81+ million records. PostgreSQL logical replication seemed like the obvious choice.

💡 Warning Signs:

Zero visibility into migration progress during 24+ hour windows

Timeouts triggered by configuration hidden at the user-role level

Multiple days of debugging for what should have been routine infrastructure work

No way to resume from failure points without starting over

The Journey: From Problem to Solution

Step 1: Recognizing the Operational Blindness

Before:

After:

🎯 Impact:

Real-time progress tracking instead of 24-hour blackouts

Clear batch-level success/failure indicators

Predictable timeline estimates based on processing rates
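To make that concrete, here's a minimal sketch of batch-level progress logging in plain Ruby. `MigrationProgress` and its methods are hypothetical names for illustration, not a gem, and the injectable clock is only there so the rate and ETA math can be tested. The ETA is remaining rows divided by observed rows per second, so it assumes throughput stays roughly steady.

```ruby
require "logger"

# Hypothetical progress tracker for a batched copy job.
class MigrationProgress
  attr_reader :processed, :total

  def initialize(total:, logger: Logger.new($stdout),
                 clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @total = total
    @processed = 0
    @logger = logger
    @clock = clock
    @started_at = @clock.call
  end

  # Record one finished batch and log where the migration stands.
  def record_batch(size)
    @processed += size
    @logger.info(format("%d/%d rows (%.1f%%), %.0f rows/s, ETA %s",
                        @processed, @total, percent, rate, eta_text))
  end

  def percent
    return 100.0 if @total.zero?
    @processed * 100.0 / @total
  end

  # Rows per second since the tracker was created.
  def rate
    elapsed = @clock.call - @started_at
    elapsed.positive? ? @processed / elapsed : 0.0
  end

  # Seconds remaining at the current rate, or nil if no rate yet.
  def eta_seconds
    r = rate
    return nil if r.zero?
    (@total - @processed) / r
  end

  private

  def eta_text
    s = eta_seconds
    s ? format("%dm%02ds", s.to_i / 60, s.to_i % 60) : "unknown"
  end
end

now = 0.0
progress = MigrationProgress.new(total: 10_000, clock: -> { now })
now = 10.0
progress.record_batch(1_000) # logs: 1000/10000 rows (10.0%), 100 rows/s, ETA 1m30s
```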

Step 2: Building Resilience Into Every Layer

The breakthrough came when I realized that reliability trumps raw performance for large migrations.
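One way to sketch that idea, assuming rows keyed by an integer `id`: walk the table in primary-key order, retry a failing batch a few times with backoff, and only advance the checkpoint once a batch has been written. `ResumableCopier`, `writer`, `checkpoint`, and `sleeper` are hypothetical names, and the Hash checkpoint stands in for whatever persisted record you'd use in Rails.

```ruby
# Hypothetical resumable batch copier. Arrays/Hashes stand in for tables;
# `checkpoint` stands in for a persisted status row.
class ResumableCopier
  def initialize(source:, writer:, checkpoint:, batch_size: 1_000, max_attempts: 3,
                 sleeper: ->(seconds) { sleep(seconds) })
    @source = source.sort_by { |row| row[:id] } # walk rows in primary-key order
    @writer = writer
    @checkpoint = checkpoint
    @batch_size = batch_size
    @max_attempts = max_attempts
    @sleeper = sleeper
  end

  def run
    loop do
      last_id = @checkpoint.fetch(:last_id, 0)
      # Keyset pagination: the next batch starts after the last committed id.
      batch = @source.lazy.select { |row| row[:id] > last_id }.first(@batch_size)
      break if batch.empty?

      with_retries { @writer.call(batch) }
      # Advance the checkpoint only after the batch was written.
      @checkpoint[:last_id] = batch.last[:id]
    end
  end

  private

  # Retries a failing batch with exponential backoff (1 s, 2 s, ...),
  # then re-raises so the run stops with the checkpoint intact.
  def with_retries
    attempts = 0
    begin
      attempts += 1
      yield
    rescue StandardError
      raise if attempts >= @max_attempts
      @sleeper.call(2**(attempts - 1))
      retry
    end
  end
end

destination = {}
checkpoint = {} # persist this between runs to resume after a crash
rows = (1..10).map { |id| { id: id, photo_id: id * 10 } }
writer = ->(batch) { batch.each { |row| destination[row[:id]] = row } } # upsert by id
ResumableCopier.new(source: rows, writer: writer, checkpoint: checkpoint, batch_size: 3).run
```

Because the writer upserts by `id`, replaying a batch after a crash is harmless. Against a real database the batch lookup would be a query along the lines of `WHERE id > last_id ORDER BY id LIMIT batch_size`, which stays fast on an indexed primary key; the in-memory scan here is only for illustration.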

The Aha Moment

The key realization hit me during hour 18 of staring at a stuck PostgreSQL process: observability is more valuable than theoretical performance.

When you can't see what's happening, you can't make informed decisions. When you can't resume from failures, you're always one configuration edge case away from starting over.

Real Numbers From This Experience

Before: 24+ hours with zero progress visibility

After: Real-time batch completion tracking, with recovery windows under 15 minutes

Debugging time: 3 days → 0 days (failures are self-explanatory)

Knowledge dependency: Deep PostgreSQL expertise → Standard Rails patterns

The Final Result

🎉 Key Improvements:

100% migration visibility with real-time progress tracking

Automatic resume from any failure point

Built-in data integrity validation

No specialized PostgreSQL expertise required for troubleshooting
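Built-in integrity validation can be sketched as comparing source and destination in fixed id ranges: a row count plus a SHA-256 digest of the rows in each range. Any range that doesn't match tells you where to look, instead of a single yes/no for all 81 million rows. This is a plain-Ruby illustration under assumptions (integer `id` keys, rows as hashes); `mismatched_ranges` and `fingerprint` are hypothetical helpers, and on real tables you'd likely compute the per-range counts and digests in SQL rather than loading rows into Ruby.

```ruby
require "digest"

# Hypothetical validator: buckets rows by id range and returns the ranges whose
# row count or content digest differs between source and destination.
def mismatched_ranges(source_rows, dest_rows, range_size:, columns:)
  ranges = Hash.new { |h, k| h[k] = { source: [], dest: [] } }
  source_rows.each { |row| ranges[row[:id] / range_size][:source] << row }
  dest_rows.each { |row| ranges[row[:id] / range_size][:dest] << row }

  ranges.keys.sort.filter_map do |bucket|
    sides = ranges[bucket]
    next if fingerprint(sides[:source], columns) == fingerprint(sides[:dest], columns)
    (bucket * range_size)...((bucket + 1) * range_size)
  end
end

# Row count plus a digest of the chosen columns, in id order.
def fingerprint(rows, columns)
  canonical = rows.sort_by { |row| row[:id] }.map { |row| row.values_at(*columns).join("|") }
  [rows.size, Digest::SHA256.hexdigest(canonical.join("\n"))]
end

source = (1..25).map { |id| { id: id, kid_id: id % 4 } }
copy = source.map(&:dup).reject { |row| row[:id] == 13 }
mismatched_ranges(source, copy, range_size: 10, columns: [:id, :kid_id]) # => [10...20]
```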

Tomorrow Morning Action Items

1. Quick Wins (5-Minute Changes)

Add progress logging to any existing batch jobs

Create a simple MigrationStatus model for tracking operations

Document your current migration procedures (you'll thank yourself later)
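Here's roughly what a `MigrationStatus` record could track, sketched as a plain Ruby class so it runs without Rails. In an app it would more likely be an ActiveRecord model backed by its own table; the field names and allowed transitions below are assumptions, not a prescribed schema. The important field is `last_processed_id`, which is what lets a failed run pick up where it stopped.

```ruby
# Hypothetical, framework-free stand-in for a MigrationStatus record.
class MigrationStatus
  TRANSITIONS = {
    pending: [:running],
    running: [:completed, :failed],
    failed: [:running], # resuming a failed run
    completed: []
  }.freeze

  attr_reader :name, :status, :total_rows, :processed_rows, :last_processed_id, :error_message

  def initialize(name:, total_rows:)
    @name = name
    @total_rows = total_rows
    @status = :pending
    @processed_rows = 0
    @last_processed_id = nil
    @error_message = nil
  end

  def start!
    transition_to(:running)
    @error_message = nil
  end

  # Called after each successful batch; last_id is the resume point.
  def batch_done!(rows:, last_id:)
    raise "not running" unless @status == :running
    @processed_rows += rows
    @last_processed_id = last_id
  end

  def fail!(error)
    transition_to(:failed)
    @error_message = error.message
  end

  def complete!
    transition_to(:completed)
  end

  def percent_complete
    return 100.0 if @total_rows.zero?
    (@processed_rows * 100.0 / @total_rows).round(1)
  end

  private

  # Rejects transitions that are not listed in TRANSITIONS.
  def transition_to(new_status)
    allowed = TRANSITIONS.fetch(@status)
    raise ArgumentError, "#{@status} -> #{new_status} not allowed" unless allowed.include?(new_status)
    @status = new_status
  end
end

status = MigrationStatus.new(name: "photo_kids", total_rows: 4_000)
status.start!
status.batch_done!(rows: 1_000, last_id: 1_000)
```

In Rails you'd persist these fields with an `update!` after each batch, so the record survives a crashed worker.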

2. Next Steps

Build a small proof-of-concept with 1000 records

Test failure scenarios and recovery mechanisms

Integrate with your existing monitoring infrastructure
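For the failure-scenario testing, one hedged sketch: run a 1,000-row copy, inject a crash right after a batch is written but before its checkpoint is saved, resume, and count what landed in the destination. `copy_rows`, `crash_then_resume`, and `SimulatedCrash` are hypothetical test helpers. The comparison shows why the write step should be idempotent (an upsert keyed by `id`) rather than a plain insert.

```ruby
# Hypothetical failure-injection harness for a small proof of concept.
class SimulatedCrash < StandardError; end

# Copies `source` (assumed sorted by :id) in batches, saving a checkpoint after
# each one. `crash_at` kills the run right after that batch index is written
# but before its checkpoint is saved.
def copy_rows(source, checkpoint, batch_size:, crash_at: nil, &write)
  source.each_slice(batch_size).with_index do |batch, index|
    next if batch.last[:id] <= checkpoint.fetch(:last_id, 0) # done in an earlier run
    write.call(batch)
    raise SimulatedCrash if index == crash_at
    checkpoint[:last_id] = batch.last[:id]
  end
end

# One run that crashes, then a resumed run sharing the same checkpoint.
def crash_then_resume(source, batch_size:, crash_at:, &write)
  checkpoint = {}
  begin
    copy_rows(source, checkpoint, batch_size: batch_size, crash_at: crash_at, &write)
  rescue SimulatedCrash
    # In a real test this is where the job process would be restarted.
  end
  copy_rows(source, checkpoint, batch_size: batch_size, &write)
end

source = (1..1_000).map { |id| { id: id } }

appended = []
crash_then_resume(source, batch_size: 100, crash_at: 3) { |batch| appended.concat(batch) }
appended.size # => 1100 (rows 301-400 were written twice)

upserted = {}
crash_then_resume(source, batch_size: 100, crash_at: 3) { |batch| batch.each { |row| upserted[row[:id]] = row } }
upserted.size # => 1000
```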

Your Turn!

The Migration Strategy Challenge

Here's a simplified version of the problem. How would you improve this basic migration approach?
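If you want something concrete to poke at, here's one hypothetical shape such a basic approach might take, in plain Ruby with arrays standing in for tables. `SourceRow`, `basic_migration`, and the `photo_id`/`kid_id` columns are assumptions for illustration, and the row count is kept small so it runs quickly.

```ruby
# Hypothetical baseline for the challenge. Plain arrays stand in for the old and
# new tables; photo_id and kid_id are assumed column names.
SourceRow = Struct.new(:id, :photo_id, :kid_id)

def basic_migration(source, destination)
  source.each do |row|
    # No batching, no checkpoint, no progress output, no validation:
    # an exception here leaves a partial copy and no record of where it stopped.
    destination << { id: row.id, photo_id: row.photo_id, kid_id: row.kid_id }
  end
  destination.size
end

source = (1..10_000).map { |i| SourceRow.new(i, i / 3, i % 50) } # scale to 1_000_000 for the challenge
destination = []
basic_migration(source, destination)
```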

💬 Discussion Prompts:

What happens if this fails halfway through 1 million records?

How would you add progress tracking without changing the core logic?

What's your strategy for validating the migration completed successfully?

🔧 Useful Resources:

activerecord-import gem: for bulk inserts with ActiveRecord

Found this useful? Share it with a fellow developer who's ever been burned by a "simple" database migration! And don't forget to reply with your own migration war stories.

Happy coding!

Tips and Notes:

Note: All code examples focus on the patterns rather than production-ready implementations

Pro Tip: Always test your migration rollback strategy before you need it

Remember: Visibility and reliability beat raw performance for critical operations