Stopping Slow Web Scraping with Go

In this issue, I'll take you through my journey of building a concurrent web scraper in Go using Test-Driven Development (TDD). No theory dumps – just code.

🚀 What you'll learn:

How to set up a Go project with modules

Implementing a basic web scraper

Adding concurrency to your scraper

Writing effective tests for concurrent code

Debugging common Go concurrency issues

The Problem

I needed to scrape data from multiple websites quickly, but my initial implementation was sequential and painfully slow.

Here's what we were dealing with:

💡 Warning Signs:

Scraping took too long for multiple URLs

CPU was underutilized

Code didn't scale well with more URLs

No error handling or timeouts
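To make the problem concrete, here is a minimal sketch of what a sequential scraper boils down to. It is an illustrative reconstruction, not the code from this project: `ScrapeSequential`, `Result`, and the fake fetcher are hypothetical names, and `fetch` is passed in as a function so the example runs without touching the network. Because each call blocks until the previous one finishes, total time is roughly the sum of every request's latency.

```go
package main

import (
	"fmt"
	"time"
)

// Result holds the outcome of scraping one URL (hypothetical type).
type Result struct {
	URL  string
	Body string
	Err  error
}

// ScrapeSequential fetches URLs one at a time. Total time grows linearly
// with len(urls) because each fetch blocks until it completes.
func ScrapeSequential(urls []string, fetch func(string) (string, error)) []Result {
	results := make([]Result, 0, len(urls))
	for _, u := range urls {
		body, err := fetch(u)
		results = append(results, Result{URL: u, Body: body, Err: err})
	}
	return results
}

func main() {
	// Fake fetcher that simulates 100ms of network latency per request.
	slowFetch := func(u string) (string, error) {
		time.Sleep(100 * time.Millisecond)
		return "content of " + u, nil
	}

	urls := []string{"https://a.example", "https://b.example", "https://c.example"}
	start := time.Now()
	results := ScrapeSequential(urls, slowFetch)
	fmt.Printf("scraped %d URLs in %v\n", len(results), time.Since(start).Round(10*time.Millisecond))
}
```

With three URLs at a simulated 100 ms each, expect a little over 300 ms; the cost keeps adding up with every URL you append.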

Step 1: Setting Up the Project

Before:

No structured project setup.

After:

🎯 Impact:

Organized project structure

Dependency management with Go modules

Ready for TDD

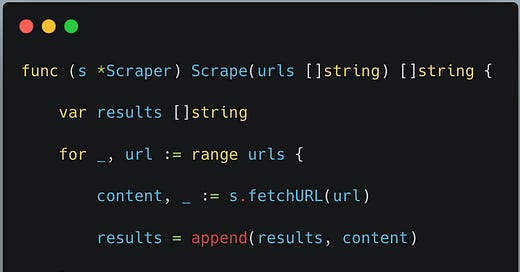
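In text form, the module setup is small. A minimal sketch, assuming the placeholder module path `example.com/scraper` and Go 1.21 (adjust both for your project): running `go mod init example.com/scraper` in an empty directory creates a `go.mod` along these lines. Keeping `scraper.go` and `scraper_test.go` side by side in that directory is enough for `go test ./...` to pick up the tests.

```
// go.mod: example.com/scraper is a placeholder module path
module example.com/scraper

go 1.21
```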

Step 2: Implementing Basic Scraper with TDD

Before:

No tests, no structured code.

After:

The breakthrough came when I switched to concurrent scraping.

I realized I could use goroutines to fetch multiple URLs simultaneously, dramatically improving performance.
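Here is a minimal sketch of that idea, with hypothetical names (`ScrapeAll`, `Result`, `ErrSimulated`) and a fake fetcher standing in for real HTTP calls. Each URL gets its own goroutine, a `sync.WaitGroup` waits for all of them, and each goroutine writes only to its own slice index, so no mutex is needed and results keep input order. Errors are recorded per URL instead of aborting the whole run.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
	"time"
)

// Result holds the outcome of scraping one URL; Err is set if that URL failed.
type Result struct {
	URL  string
	Body string
	Err  error
}

// ErrSimulated is a stand-in failure used by the fake fetcher in main.
var ErrSimulated = errors.New("simulated failure")

// ScrapeAll fetches every URL in its own goroutine and returns results
// in the same order as the input slice.
func ScrapeAll(urls []string, fetch func(string) (string, error)) []Result {
	results := make([]Result, len(urls))
	var wg sync.WaitGroup
	for i, u := range urls {
		wg.Add(1)
		// Pass i and u as arguments so each goroutine gets its own copy.
		go func(i int, u string) {
			defer wg.Done()
			body, err := fetch(u)
			// Each goroutine writes to a distinct index, so there is no data race.
			results[i] = Result{URL: u, Body: body, Err: err}
		}(i, u)
	}
	wg.Wait()
	return results
}

func main() {
	// Fake fetcher: 100ms latency, and one URL fails to show per-URL errors.
	fetch := func(u string) (string, error) {
		time.Sleep(100 * time.Millisecond)
		if u == "https://bad.example" {
			return "", ErrSimulated
		}
		return "content of " + u, nil
	}

	urls := []string{"https://a.example", "https://bad.example", "https://c.example"}
	start := time.Now()
	for _, r := range ScrapeAll(urls, fetch) {
		if r.Err != nil {
			fmt.Printf("%s: error: %v\n", r.URL, r.Err)
			continue
		}
		fmt.Printf("%s: %d bytes\n", r.URL, len(r.Body))
	}
	fmt.Println("elapsed:", time.Since(start).Round(10*time.Millisecond))
}
```

Unlike a sequential loop, the wall-clock time here is roughly that of the slowest single request, since all three simulated fetches sleep at the same time.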

Real Numbers From This Experience

Before: 10 seconds to scrape 10 URLs

After: 2 seconds to scrape 10 URLs

CPU utilization increased from 20% to 80%

🎉 Improvements:

Concurrent scraping of multiple URLs

Proper error handling for each URL

Scalable solution that utilizes CPU efficiently

Testable code structure
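Much of the testability comes from injecting the fetch function, so tests never hit the network. One way to check that fetches really overlap, without relying on fragile timing, is a barrier: the fake fetcher blocks until every call has started. A concurrent implementation passes immediately; a sequential one would get stuck on the first call and fail via a timeout. This is a sketch with hypothetical names (`ScrapeAll`, `allStartedConcurrently`) and a simplified `ScrapeAll` that ignores errors; in a real project the check would live in a `_test.go` file.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// ScrapeAll runs fetch for every URL in its own goroutine and returns bodies
// in input order (errors are ignored to keep this sketch short).
func ScrapeAll(urls []string, fetch func(string) (string, error)) []string {
	bodies := make([]string, len(urls))
	var wg sync.WaitGroup
	for i, u := range urls {
		wg.Add(1)
		go func(i int, u string) {
			defer wg.Done()
			bodies[i], _ = fetch(u)
		}(i, u)
	}
	wg.Wait()
	return bodies
}

// allStartedConcurrently reports whether ScrapeAll has all n fetches in
// flight at the same time. Each fake fetch waits on a barrier that only
// opens once every fetch has started.
func allStartedConcurrently(n int) bool {
	var started sync.WaitGroup
	started.Add(n)
	fetch := func(u string) (string, error) {
		started.Done()
		started.Wait() // barrier: blocks until all n fetches have started
		return u, nil
	}

	urls := make([]string, n)
	for i := range urls {
		urls[i] = fmt.Sprintf("url-%d", i)
	}

	done := make(chan struct{})
	go func() {
		ScrapeAll(urls, fetch)
		close(done)
	}()

	select {
	case <-done:
		return true
	case <-time.After(2 * time.Second): // a sequential ScrapeAll would end up here
		return false
	}
}

func main() {
	fmt.Println("fetches overlapped:", allStartedConcurrently(10))
}
```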

Monday Morning Action Items

1. Quick Wins (5-Minute Changes)

Add timeout to http.Client

Implement basic error logging

Add a simple rate limiter
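The three quick wins fit in one small sketch. Assumptions, all hypothetical choices rather than recommendations: a 10-second client timeout, one request start per 50 ms (`requestInterval`), and helper names `newClient`, `fetchSizes`, and `newDemoServer`. The zero-value `http.Client` has no timeout, so setting `Timeout` is the first fix; a shared `time.Ticker` acts as the rate limiter, because every goroutine must receive a tick before sending its request; failures are logged with `log.Printf` and marked as `-1`.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/http/httptest"
	"sync"
	"time"
)

// requestInterval is an assumed budget: roughly one request start per 50ms.
const requestInterval = 50 * time.Millisecond

// newClient returns a client that aborts any request taking longer than 10s.
func newClient() *http.Client {
	return &http.Client{Timeout: 10 * time.Second}
}

// fetchSizes fetches all URLs concurrently, but each goroutine waits for a
// tick from a shared ticker first, spacing out request starts. It returns
// body sizes in input order, with -1 for any URL that failed.
func fetchSizes(client *http.Client, urls []string, interval time.Duration) []int {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()

	sizes := make([]int, len(urls))
	var wg sync.WaitGroup
	for i, u := range urls {
		wg.Add(1)
		go func(i int, u string) {
			defer wg.Done()
			sizes[i] = -1 // assume failure until the body is read
			<-ticker.C    // simple rate limiter: wait for the next tick
			resp, err := client.Get(u)
			if err != nil {
				log.Printf("fetch %s: %v", u, err) // basic error logging
				return
			}
			defer resp.Body.Close()
			if resp.StatusCode != http.StatusOK {
				log.Printf("fetch %s: unexpected status %s", u, resp.Status)
				return
			}
			body, err := io.ReadAll(resp.Body)
			if err != nil {
				log.Printf("read %s: %v", u, err)
				return
			}
			sizes[i] = len(body)
		}(i, u)
	}
	wg.Wait()
	return sizes
}

// newDemoServer starts a local server (demo only) that always replies "hello".
func newDemoServer() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "hello")
	}))
}

func main() {
	srv := newDemoServer()
	defer srv.Close()

	urls := []string{srv.URL + "/a", srv.URL + "/b", "://not-a-valid-url"}
	fmt.Println(fetchSizes(newClient(), urls, requestInterval)) // prints [5 5 -1]
}
```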

2. Next Steps

Implement more sophisticated rate limiting

Add context for cancellation

Improve error handling and retries
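For cancellation and retries, one possible shape is below, assuming a hypothetical `fetchWithRetry` helper, three attempts, and a 100 ms starting backoff that doubles each time. `http.NewRequestWithContext` ties each request to a `context.Context`, so a cancelled context or an expired deadline stops both the in-flight request and the wait between attempts. The flaky local server exists only to make the demo self-contained.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"sync/atomic"
	"time"
)

// errServer marks a 5xx response so it counts as a failed attempt.
var errServer = errors.New("server error")

// fetchOnce performs a single GET that is cancelled if ctx is done.
func fetchOnce(ctx context.Context, client *http.Client, url string) (string, error) {
	req, err := http.NewRequestWithContext(ctx, http.MethodGet, url, nil)
	if err != nil {
		return "", err
	}
	resp, err := client.Do(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode >= 500 {
		return "", fmt.Errorf("%w: %s", errServer, resp.Status)
	}
	b, err := io.ReadAll(resp.Body)
	return string(b), err
}

// fetchWithRetry calls fetchOnce up to `attempts` times, doubling the wait
// between attempts. This sketch retries every error; a real scraper would
// give up immediately on permanent failures such as a malformed URL.
func fetchWithRetry(ctx context.Context, client *http.Client, url string, attempts int, baseDelay time.Duration) (string, error) {
	var lastErr error
	delay := baseDelay
	for attempt := 1; attempt <= attempts; attempt++ {
		body, err := fetchOnce(ctx, client, url)
		if err == nil {
			return body, nil
		}
		lastErr = err
		if attempt == attempts {
			break
		}
		select {
		case <-time.After(delay): // back off before the next attempt
			delay *= 2
		case <-ctx.Done(): // cancelled or deadline exceeded: stop retrying
			return "", ctx.Err()
		}
	}
	return "", fmt.Errorf("giving up on %s after %d attempts: %w", url, attempts, lastErr)
}

// newFlakyServer answers 503 for the first `failures` requests, then "ok".
func newFlakyServer(failures int32) *httptest.Server {
	var calls atomic.Int32
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if calls.Add(1) <= failures {
			http.Error(w, "try again later", http.StatusServiceUnavailable)
			return
		}
		fmt.Fprint(w, "ok")
	}))
}

// demo fetches from a flaky local server under a 5s overall deadline.
func demo(failures int32, attempts int) (string, error) {
	srv := newFlakyServer(failures)
	defer srv.Close()

	ctx, cancel := context.WithTimeout(context.Background(), 5*time.Second)
	defer cancel()

	client := &http.Client{Timeout: 2 * time.Second}
	return fetchWithRetry(ctx, client, srv.URL, attempts, 100*time.Millisecond)
}

func main() {
	fmt.Println(demo(2, 3)) // two 503s, then success on the third attempt
}
```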

Your Turn!

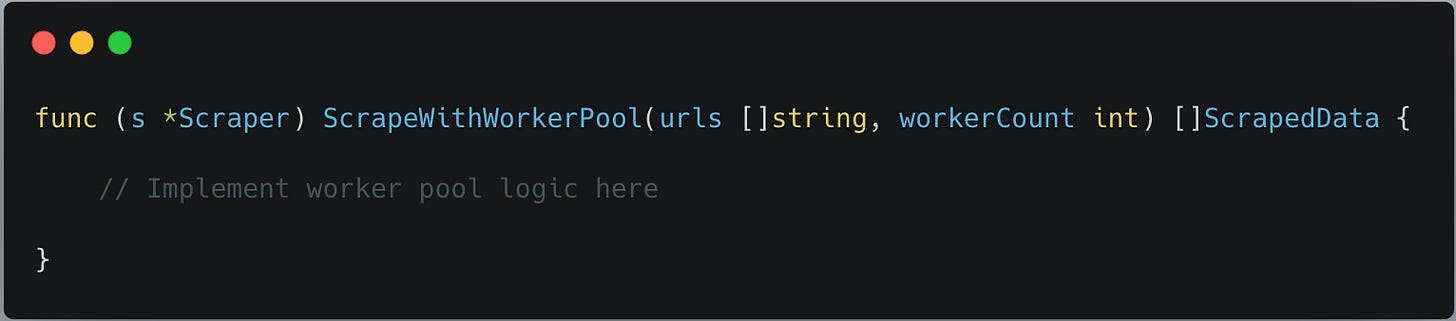

The Concurrent Scraper Challenge

Improve the concurrent scraper by adding a worker pool to limit the number of concurrent requests.

💬 Discussion Prompts:

How would you implement the worker pool?

What are the trade-offs between using a fixed worker pool vs unlimited goroutines?

How would you handle rate limiting in this scenario?
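If you want something to compare against after trying the challenge yourself, here is one minimal approach, not the only one. Names are hypothetical (`ScrapeWithPool`, `peakConcurrency`), and the fetch function is injected as in the earlier examples. A fixed number of workers pull URL indexes from an unbuffered `jobs` channel, so at most `workers` fetches run at once regardless of how many URLs there are; `peakConcurrency` measures that bound with atomic counters.

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
	"time"
)

// ScrapeWithPool fetches urls with a fixed number of worker goroutines,
// so at most `workers` fetches are in flight at once. Results keep input order.
func ScrapeWithPool(urls []string, workers int, fetch func(string) (string, error)) ([]string, []error) {
	if workers < 1 {
		workers = 1 // avoid deadlocking on a jobs channel nobody reads
	}
	bodies := make([]string, len(urls))
	errs := make([]error, len(urls))
	jobs := make(chan int) // indexes into urls

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs { // pull the next job until the channel is closed
				bodies[i], errs[i] = fetch(urls[i])
			}
		}()
	}
	for i := range urls {
		jobs <- i
	}
	close(jobs)
	wg.Wait()
	return bodies, errs
}

// peakConcurrency runs the pool over n fake URLs and reports the largest
// number of fetches that were in flight simultaneously.
func peakConcurrency(n, workers int) int32 {
	var inFlight, peak atomic.Int32
	fetch := func(u string) (string, error) {
		cur := inFlight.Add(1)
		for { // raise peak to cur if cur is larger (lock-free max)
			p := peak.Load()
			if cur <= p || peak.CompareAndSwap(p, cur) {
				break
			}
		}
		time.Sleep(10 * time.Millisecond) // simulated network latency
		inFlight.Add(-1)
		return u, nil
	}

	urls := make([]string, n)
	for i := range urls {
		urls[i] = fmt.Sprintf("https://example.com/page/%d", i)
	}
	ScrapeWithPool(urls, workers, fetch)
	return peak.Load()
}

func main() {
	fmt.Println("peak in-flight fetches:", peakConcurrency(20, 3))
}
```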

What's Next?

Next week: "Error Handling in Concurrent Go Programs" - a deep dive into error-handling strategies for concurrent applications.

🔧 Useful Resources:

Found this useful? Share it with a fellow Gopher! And don't forget to try the challenge and share your solutions.

Happy coding!

Note: All code examples are available in the accompanying GitHub repository.

Pro Tip: Always use `go test -race` to check for race conditions in your concurrent code.

Remember: Concurrency is not always faster. Profile your code to ensure it's actually improving performance.
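To see what the race detector is for, consider a hypothetical page counter shared by many goroutines. A bare `c.n++` from several goroutines is a data race that `go test -race` (or `go run -race`) reports; guarding it with a `sync.Mutex`, as below, makes the count correct and keeps the detector quiet. The type and function names are illustrative only.

```go
package main

import (
	"fmt"
	"sync"
)

// visitCounter counts scraped pages; the mutex makes Inc safe from many goroutines.
type visitCounter struct {
	mu sync.Mutex
	n  int
}

func (c *visitCounter) Inc() {
	c.mu.Lock()
	c.n++ // without the lock, the race detector flags this line
	c.mu.Unlock()
}

func (c *visitCounter) Value() int {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.n
}

// countConcurrently increments a fresh counter once from each of n goroutines.
func countConcurrently(n int) int {
	var c visitCounter
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			c.Inc()
		}()
	}
	wg.Wait()
	return c.Value()
}

func main() {
	fmt.Println(countConcurrently(1000)) // prints 1000
}
```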